Thinking about how to prevent big system project failure has somehow always reminded me of the Will Rogers quote: “Don’t gamble; take all your savings and buy some good stock and hold it till it goes up, then sell it. If it don’t go up, don’t buy it.”

In other words, with big projects, by the time you realize it’s failed, it’s pretty much too late. Let’s think a bit about the reasons why, and what we can do to change that.

First off, I’ve never seen a big project fail specifically because of technology. Ever. And few IT veterans will disagree with me. Instead, failures nearly always go back to poor communication, murky goals, inadequate management, or mismatched expectations. People issues, in other words.

So much for that admittedly standard observation. But as the old saying goes, “everyone complains about the weather, but no one does anything about it.” What, then, can we actually do to mitigate project failure that occurs because of these commonplace gaps?

Of course, that’s actually a long-running theme of this blog and several other key blogs that cover similar topics (see my Blogroll to the right of this post). Various “hot stove lessons” have taught most of us the value (indeed, necessity) of fundamental approaches and tools such as basic project management, stakeholder involvement and communication, executive sponsorship, and the like. Those approaches provide some degree of early warning and an opportunity to regroup; they often prevent relatively minor glitches from escalating into real problems.

But it’s obvious that projects still can fail, even when they use those techniques. People, after all, are fallible, and simply embracing an approach or methodology doesn’t mean that all the right day-to-day decisions are guaranteed or that every problem is anticipated. Once again, there are no silver bullets.

One of the problems, as I’ve pointed out before, is that it can actually be surprisingly difficult to tell, even from the inside, how well a project is going. Project management documents can appear reliably, milestones can be met, and everything can look smooth. Yet the project may be at increasingly large risk of failure, because you can’t address problems you haven’t identified. This is particularly so because the umbrella concept of “failure” includes those situations where the system simply won’t be adopted and used by the target group, due to various cultural or communication factors that have little or nothing to do with technology or with those interim project milestones.

Moreover, every project has dark moments, times when things aren’t going well. People get good at shrugging those off, sometimes too good. Since people involved in a project generally want to succeed, they unintentionally start ignoring warning signs, writing those signs off as normal, insignificant, or misleading.

I’ve been involved in any number of huge systems projects, sometimes even “death march” in nature. In many of them, I’ve seen the following kinds of dangerous “big project psychologies” and behaviors set in:

- Wishful thinking – we’ll be able to launch on time, because we really want to

- Self-congratulation – we’ve been working awfully hard, so we must be making good progress

- Testosterone – nobody’s going to see us fail. We ROCK.

- Doom-and-gloom fatalism – we’ll just keep coming in every day and do our jobs, and what happens, happens. (See Dilbert, virtually any strip).

- Denial – the project just seems to be going badly right now; things are really OK.

- Gridlock – the project is stuck in a kind of limbo where no one wants to make certain key decisions, perhaps because then they’ll be blamed for the failure

- Moving the goal posts – e.g., we never really intended to include reports in the system. And one week of testing will be fine; we don’t need those two weeks we planned on.

An adroit CIO, not to mention any good project leader, will of course be aware of all of these syndromes, and know when to probe, when to regroup, when to shuffle the deck. But sometimes it’s the leaders themselves who succumb to those behaviors. And for people on the project periphery, such as other C-level executives? It’s hard to know whom to listen to on the team, and it’s definitely dangerous to depend on overheard hallway conversations: Mary in the PMO may be a perennial optimist, Joe over in the network group a chronic Eeyore who thinks nothing will ever work, and so on. There are few, if any, reliable harbingers of looming disaster.

Wouldn’t it be great if there were some kind of codified, external measurement/evaluation tool that could methodically identify the kinds of disconnects that even well-led projects can fall prey to? One that could pinpoint where the true risk areas are as the project evolves, and help people take targeted action ahead of time to address those problem spots?

That’s why I got so excited in a recent conversation with well-known IT failure expert Michael Krigsman, CEO of Asuret, a company that sells “technology-backed services”. He gave me a look at their forthcoming product, an impressively slick, well-engineered tool that in my view promises to provide exactly that kind of benefit: identifying where and why a project might fail in terms of some of those people/best practices aspects, before it actually does.

In a nutshell, Asuret facilitates a cross-sectional analysis of project participants and stakeholders as the project proceeds. By aggregating the answers to its carefully crafted questions and constructing a number of easy-overview summary charts, the tool then displays astonishingly insightful visual breakdowns that let you pinpoint major disconnects, such as between stakeholder groups and IT, or between actual project-specific and industry-best practices.
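To make the idea concrete, here is a minimal sketch of that kind of cross-group comparison. It is not Asuret's actual method, whose internals aren't described here; the sample responses, the 1-to-5 scales, the function names `aggregate` and `find_disconnects`, and the gap threshold are all assumptions invented for illustration.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical survey responses (all values invented for illustration):
# (group, topic, importance 1-5, vulnerability 1-5)
RESPONSES = [
    ("Executives", "Business case", 5, 4),
    ("Executives", "Business case", 4, 5),
    ("IT", "Business case", 4, 1),
    ("IT", "Business case", 5, 2),
    ("Executives", "Testing plan", 3, 2),
    ("IT", "Testing plan", 3, 3),
]


def aggregate(responses):
    """Average importance and vulnerability per (topic, group)."""
    buckets = defaultdict(list)
    for group, topic, importance, vulnerability in responses:
        buckets[(topic, group)].append((importance, vulnerability))
    return {
        key: (mean(i for i, _ in scores), mean(v for _, v in scores))
        for key, scores in buckets.items()
    }


def find_disconnects(responses, threshold=2.0):
    """Return topics where groups' mean vulnerability differs by >= threshold.

    The threshold is an arbitrary assumption, not a calibrated value.
    """
    by_topic = defaultdict(dict)
    for (topic, group), (_, vulnerability) in aggregate(responses).items():
        by_topic[topic][group] = vulnerability
    disconnects = {}
    for topic, groups in by_topic.items():
        gap = max(groups.values()) - min(groups.values())
        if gap >= threshold:
            disconnects[topic] = gap
    return disconnects


if __name__ == "__main__":
    for topic, gap in find_disconnects(RESPONSES).items():
        print(f"Possible disconnect on {topic!r}: vulnerability gap of {gap:.1f}")
```

With this made-up data, only the business case is flagged: the executives rate it as far more vulnerable than IT does, while the two groups roughly agree on the testing plan. A real tool would presumably weigh importance as well and use far more carefully designed questions.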

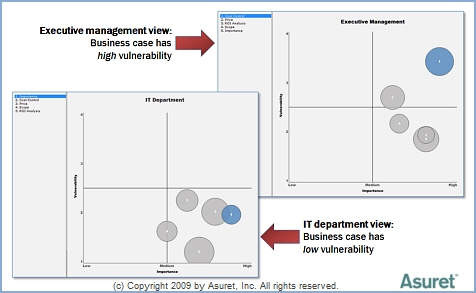

Let’s look at an example of what it shows you. By mapping aggregated analysis results onto charted dimensions of importance and vulnerability, and slicing these charts by department, you can see at a glance in the chart below that there’s a disconnect: e.g., that executives think that the business case for the project has high vulnerability, while the IT participants view it as having low vulnerability. Early warning sign! And certainly better (more methodical, more aggregated) than relying solely on what you’ve heard Joe grumbling about in the lunchroom.

In the example, the disconnect looms large: look at the darker circle (representing the participants’ responses to questions regarding the project’s business case) and its different location on the two grids shown below:

This all sounds simple in this brief description, perhaps, but taken as a whole, Asuret’s methodical implementation and targeted, useful results are nothing short of groundbreaking. Perhaps other companies provide a similar product, but I don’t know of any. And frankly, I can’t imagine a better-designed or more perfectly suited product than Asuret’s to address the issues raised in this post. I’m really looking forward to hearing more as they deploy and hone their product, because I can think of any number of large projects I’ve been on where this approach would have been revealing and useful.

It’s maybe not the ever-hoped-for holy grail, but it promises to be a small piece of it: an extension of our ability to see things before they happen. If Will Rogers had been an IT guy, I think he would have been excited too.

Great post, Peter.

I completely agree that standard project plans, status reports, and “delivery excellence” audits have significant limitations in assessing the impact and severity of failing projects, much less recommending solutions.

The Asuret tool seems to be a quantum leap forward in doing exactly that.

Hi Peter. I have also had the pleasure of seeing a demonstration of Asuret’s “groundbreaking” tool. I agree it is a thoughtful and thorough approach to addressing project failures. @mkrigsman has taken years of experience and insights and codified them into something special. I am glad you had a chance to see it and write this great post.

Steve Romero, IT Governance Evangelist

http://community.ca.com/blogs/theitgovernanceevangelist/

Peter, thank you for the insightful comments on these important issues and also on how Asuret handles them.

As an independent third-party observer with no ties whatsoever to Asuret, your review is particularly valuable and represents an impartial view.

I have not had a chance to check out the Asuret tool, but it sounds interesting. The one thing you did not address is that no matter how people respond to questions, there is nothing like getting them to respond to the working code. When you do this, you get real insight into where your project sits. Thus, we have found the only way to reduce the risk of project failure is to embrace change and iterative (agile) development.

This is the sure way to identify mismatches in expectations and keep your project moving in the right direction.

I think the title “CTO/CIO” highlights the core issue. The CTO is about delivery technologies; the CIO is about the creation and use of business information. They are quite different skill sets.

The business application, working with the people who create all source information, is about business logic. This is where the tools have historically largely failed to deliver, as they were componentized and designed to be used by technical people who are often remote from users, so the disconnect does indeed loom large. But business logic never really changes – unlike the delivery mechanisms (think of how the Pony Express has become the internet). With this in mind, a UK company, Procession, set about trying to solve the “IT” problem in a way that would remove the need for programmers when building custom applications that reflect exactly what the business user wants and support constant change.

In 2008 Bill Gates said “Most code that’s written today is procedural code. And there’s been this holy grail of development forever, which is that you shouldn’t have to write so much code,” Gates said. “We’re investing very heavily to say that customization of applications, the dream, the quest, we call it, should take a tenth as much code as it takes today.” “You should be able to do things on a declarative basis,” “We’re not here yet saying that [a declarative language has] happened and you should write a ton less procedural code, but that’s the direction the industry is going,” Gates said. “And, despite the fact that it’s taken longer than people expected, we really believe in it. It’s something that will change software development”

Gates is right, and it is exactly what Procession delivered some 10 years earlier. Using such advanced tools is the real answer to closing that disconnect and eliminating business software failure. Good methodologies in project management can reduce the risk of failure, but they are no substitute for using tools such as those described by Gates, which are now available.

Well, thanks for commenting, Dave, but much of what you write is along the lines of Things I Fundamentally Disagree With. I’ve written recently about how there are No Silver Bullets, and the kinds of claims Procession makes promise precisely that, a silver bullet; to wit: “There is now no need to custom code any applications, or buy inflexible off the shelf software, which rarely solve specific business problems.” I’m sorry, but this is a patently non-credible claim, one that at best can be viewed as vastly overstating its case. If Procession, which I’m sure is a fine company with an interesting product (albeit one I’ve never heard of in any context), had indeed delivered this holy grail ten years ago, as you say, it’d be considerably better-known.

As for CTO and CIO being completely different roles, this again is something I’ve written at length about, and I disagree entirely with your statement. I’d point, again, to what one actually sees in the real world, where the two terms are used quite often interchangeably. And no, the CTO is not (and must never be) about simply the delivery of technologies, even in companies that strongly differentiate between the two roles.

Peter

Well, it is true: a real third alternative to COTS or coded custom build, the ultimate flexible packaged application where the core code never changes. No code generation and no compiling are needed to build any business-driven requirement, recognising that people are the source of all information. When you get right back to basics on what people need to support them in their daily work, there are fewer than 13 work tasks, human and system, including the important user interface, that can address any business issue. So make them configurable “objects”, build flexible links, put them into a contained data-centric environment, add a graphical interface at build, and a whole new world opens up in just one unified tool.

Think about it: what are we doing in the 21st century, coding and recoding over and over business logic that never changes – people doing something to achieve individual and collective outputs? That’s what we recognised as a core issue, and it is what our unique design philosophy addresses. In effect we have separated business logic from the delivery technologies. Someone had to do it …..?

This has been a long journey led by business thinking. We were 10 years ahead of our time and face huge vested interests. You may have heard of the “innovator’s dilemma”, which applies to the dominant suppliers: “If they adopt or make new products that are simple to implement and easy to use, they will lose their massive streams of services revenue. Their sales models are based on selling big deals. A switch to simplicity will crater their businesses”.

Why have you not heard of us? Well, for starters we are in the UK, a virtual desert of enabling software technologies where it is very difficult to get a real understanding of the issues. The likes of Gartner know about us but ignore us. Let’s face it, we could crater their business, as they rely on continuing complexity to drive their revenues, feeding off both plates but not ours! We are also challenging the largest vendors in the US, a real David and Goliath(s) battle. Everyone who comes and sees it gets it and is truly impressed. Even we have had difficulty in articulating what we have, so coming in fresh will understandably be a challenge.

Bill Gates knew about Procession when that statement was made, and by good fortune we did market and sell a little in the US in 2000, which will effectively block any meaningful patents from anyone, including Procession – see the letters on our web site. Interestingly, some research in Australia with a strong EU connection is heading the same way; see http://www.yawlfoundation.org/.

Get ready: it will change the whole distribution model and put business in the driving seat for their applications – that’s what disruptive technology does. A good example is a UK Government contract won by an Indian company to build a new application involving people seeking help. The price was £50m, but thinking in terms of people and process it should be no more than £5m, with all work carried out locally, working directly with end users. Because of the speed of build and the inbuilt flexibility, there is no longer a requirement to have a full specification before the build starts. The build is led by business analyst skills, supporting change during the build and continuous improvement afterwards, making it a future-proof investment.

We are just at the start of this journey, with more to come. We already have our own cache in memory environment and a comprehensive tag library, so that in the build, linking the user interface to the data source is done by markup, not coding. There is no need for a custom data model, which makes change quick and relatively easy. We have in production our own web page development environment, built using our existing client-server one, and a pluggable architecture in the tag library for ready access to any data source to be presented on the user form, which can be seamlessly incorporated into the end-to-end process. This will enhance our existing capabilities to communicate with legacy systems and in the future reduce reliance on old systems.

Is this the “silver bullet”? That’s for you to judge but please believe me it works and has been well proven by early adopters as very robust and does deliver exactly as it says on the box and more – unlike most vendors!

Going direct to the US is not on our radar screen: too difficult, with too many enemies. China is……! Let me leave you with a rather profound quote made by Niccolò Machiavelli 500 years ago:

“Nothing is more difficult than to introduce a new order, because the innovator has for enemies all those who have done well under the old conditions and lukewarm defenders in those who may do well under the new”

I welcome your comments, and when you “get this” you will, I hope, become a convert and perhaps help educate the market that there is hope for business software……?

Wonderful article. With my little experience of 20 years in IT, automotive, and aerospace programs, I fully agree with the causes of project failure: poor communication, murky goals, inadequate sponsorship, people issues, etc. Harvard Business Review has also published a paper on how lack of communication leads to failure.

I have repeated this many times, including just last week: “I’ve never seen a big project fail specifically because of technology.”

So true. There are a thousand ways to fail and few paths to success.

Keep spreading the word. Our profession needs to do much better.